The narrative surrounding cloud computing is undergoing a seismic shift. For over a decade, the conversation was dominated by infrastructure as a service (IaaS): the fundamental ability to rent compute power and storage that scale on demand. Amazon Web Services (AWS) pioneered this model, securing its position as the titan of the industry. However, in the current technological landscape, simply hosting data is no longer sufficient. The new frontier, and the arena where the next decade of digital dominance will be decided, is scalable, enterprise-grade artificial intelligence.

- The Strategic Pivot: From EC2 Instances to Neural Networks

- Decoding the AWS Generative AI Stack

- The Critical Imperative of Enterprise Data Governance and Security

- MLOps: The Industrialization of Machine Learning

- Real-World Applications Driving High-Value Adoptions

- The Competitive Landscape and Future Outlook

- The Inevitable Transformation

In today’s rapidly evolving market, CTOs and IT leaders are facing daily pressure to integrate intelligent automation. The directive is clear: move beyond experimental sandboxes and deploy generative AI solutions that drive tangible business value. This transition marks a crucial pivot point for AWS. While its history is rooted in being the world’s computer closet, its legacy will almost certainly be defined by its ability to serve as the global brain for enterprise AI application development.

We are witnessing a transformation where the underlying infrastructure, while vital, is becoming commoditized. The real value proposition now lies up the stack in the platform layer, where machine learning models are trained, fine-tuned, and deployed at scale.

The Strategic Pivot: From EC2 Instances to Neural Networks

To understand where AWS is heading, we must appreciate the trajectory of enterprise technology needs. In the early 2010s, the primary challenge for businesses was escaping the capital expenditure trap of on-premises data centers. AWS provided the escape velocity with Elastic Compute Cloud (EC2) and Simple Storage Service (S3).

Today, the challenge is vastly different. Companies are drowning in data but starving for insights. The daily reality for a Fortune 500 company involves managing petabytes of unstructured information, such as customer interactions, internal documents, and IoT sensor logs, that traditional analytics cannot effectively process. This is where generative AI enters the frame, offering the ability to synthesize, create, and reason over vast datasets.

AWS has recognized that maintaining its leadership requires more than having the most data centers globally. It requires providing the most robust ecosystem for building intelligent applications. The analysis this article draws on argues that AWS's future success hinges on its AI capabilities. This is not merely an add-on feature; it is a fundamental re-architecture of its value proposition to the enterprise market.

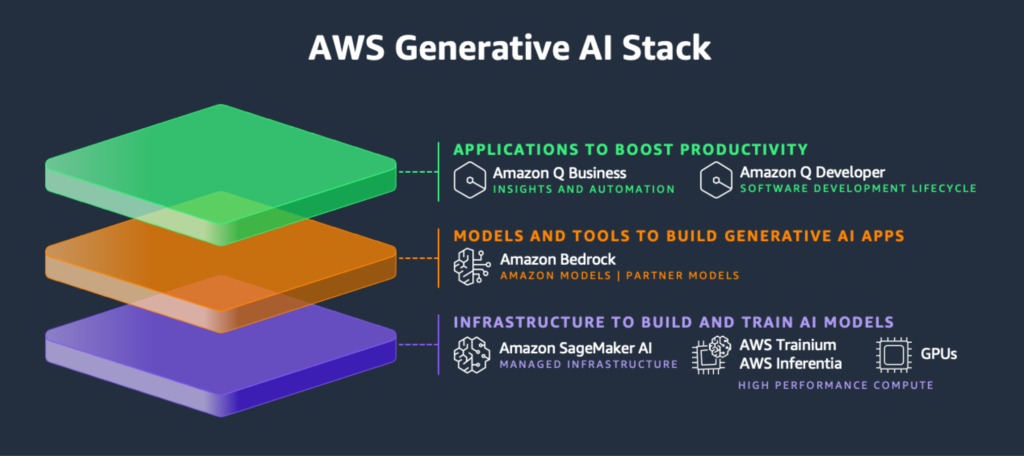

Decoding the AWS Generative AI Stack

For enterprise decision-makers evaluating cloud AI platforms, the AWS approach is distinguished by a focus on choice, security, and infrastructure optimization. Unlike competitors who may push a single monolithic model ecosystem, AWS is building a pragmatic workbench designed for diverse corporate needs.

The Silicon Advantage: Trainium and Inferentia

A critical, often overlooked aspect of the AI revolution is its massive computational cost. Training large language models (LLMs) and, more importantly, running inference on them (the process of the model generating answers) every day requires immense GPU resources. The current global shortage of high-end GPUs has created supply bottlenecks and unpredictable costs for businesses.

AWS is addressing this through vertical integration into the silicon layer. By designing its own chips optimized for machine learning workloads, namely AWS Trainium for model training and AWS Inferentia for inference, it is attempting to reduce its dependence on third-party hardware and the supply constraints that come with it.

For a CFO looking at the total cost of ownership for an AI implementation, this is highly relevant. Custom silicon allows AWS to offer better price-performance ratios for heavy AI workloads. It means that as an enterprise scales its use of a customer service bot from ten thousand queries a day to ten million, the cost curve remains manageable. This infrastructure-level optimization is a classic AWS playbook move applied to the new reality of neural network computing.
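The scaling argument is easier to see with numbers. The sketch below uses purely hypothetical per-query prices (not AWS pricing) and a deliberately simple linear cost model in which daily spend equals query volume times unit cost. Under that assumption, any price-performance gain in the unit cost is multiplied by volume.

```python
def daily_inference_cost(queries_per_day: int, cost_per_query: float) -> float:
    """Linear cost model: daily spend = query volume x unit cost per query."""
    return queries_per_day * cost_per_query


# HYPOTHETICAL unit costs in USD, for illustration only (not AWS pricing).
BASELINE_COST_PER_QUERY = 0.002
OPTIMIZED_COST_PER_QUERY = 0.0014  # assumes 30% better price-performance

for volume in (10_000, 10_000_000):
    baseline = daily_inference_cost(volume, BASELINE_COST_PER_QUERY)
    optimized = daily_inference_cost(volume, OPTIMIZED_COST_PER_QUERY)
    print(
        f"{volume:>12,} queries/day: baseline ${baseline:,.2f}, "
        f"optimized ${optimized:,.2f}, saving ${baseline - optimized:,.2f}"
    )
```

With these made-up figures, the daily saving is about $6 at ten thousand queries and about $6,000 at ten million, which is why unit inference cost comes to dominate total cost of ownership at scale.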

Amazon Bedrock and the Foundation Model Marketplace

Perhaps the most significant development in AWS’s recent strategy is Amazon Bedrock. In the dynamic world of generative AI, new foundation models are released almost weekly, each with different strengths, weaknesses, and licensing structures.

Bedrock operates as a managed service that offers access to high-performing foundation models from leading AI startups like Anthropic (the creators of Claude), Stability AI, and AI21 Labs, alongside Amazon’s own family of Titan models.

The strategic brilliance here lies in offering optionality. An enterprise might require Anthropic’s Claude for complex reasoning and extensive context windows in legal document analysis, while simultaneously needing Stability AI’s models for generating marketing imagery. Bedrock provides a unified API to access these diverse tools without requiring the customer to manage the underlying infrastructure for each one.

This “model agnostic” approach is highly appealing to large organizations that do not want to be locked into a single AI vendor’s ecosystem. It allows businesses to swap models in and out as technology evolves, future-proofing their AI investments.
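Here is a minimal sketch of what a unified API can mean in practice. It assumes boto3 is installed, AWS credentials are configured, and the account has been granted access to the example model IDs (availability varies by region, and not every Bedrock model supports the Converse API). The same `converse` call and response parsing serve every model; the routing table `MODEL_FOR_TASK` and the helpers `ask` and `make_client` are hypothetical names for illustration.

```python
from typing import Any, Dict

# HYPOTHETICAL routing table: task name -> Bedrock model ID (example IDs).
MODEL_FOR_TASK: Dict[str, str] = {
    "legal_analysis": "anthropic.claude-3-haiku-20240307-v1:0",
    "summary": "amazon.titan-text-express-v1",
}


def make_client(region: str = "us-east-1") -> Any:
    """Create a real Bedrock runtime client (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the rest of the module works without it

    return boto3.client("bedrock-runtime", region_name=region)


def ask(client: Any, task: str, prompt: str, max_tokens: int = 512) -> str:
    """Send a single-turn prompt to whichever model is mapped to `task`."""
    response = client.converse(
        modelId=MODEL_FOR_TASK[task],
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    # Converse uses one response shape for every supported provider.
    return response["output"]["message"]["content"][0]["text"]
```

A call such as `ask(make_client(), "summary", "Summarize our refund policy.")` would stay unchanged if the summary model were swapped; only the entry in `MODEL_FOR_TASK` changes, which is the practical meaning of avoiding lock-in.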

The Critical Imperative of Enterprise Data Governance and Security

If compute power is the engine of AI, data is the fuel. However, for regulated industries like healthcare, finance, and government, using public AI models presents unacceptable risks regarding data privacy and intellectual property leakage.

Frequent headlines about data breaches underscore the necessity of robust security perimeters. A major corporation cannot risk pasting sensitive financial projections into a public-facing chatbot. This is where AWS leverages its long-standing reputation for enterprise-grade security.

AWS's proposition focuses heavily on the ability to fine-tune models privately. When a company uses its proprietary data to customize a foundation model within Bedrock or SageMaker, AWS states that the data and the resulting tuned model remain private to that customer, with access that can be confined to the customer's virtual private cloud (VPC). The data is never used to train the base models that other customers access.
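To illustrate what private customization can look like, here is a sketch that assembles the request for a Bedrock fine-tuning job, assuming the boto3 `bedrock` control-plane client's `create_model_customization_job` operation. The `vpcConfig` block keeps training-data access inside the customer's VPC, and `customModelKmsKeyId` encrypts the resulting model with a customer-managed key. The helper name, ARNs, bucket paths, subnet and security-group IDs, and hyperparameter values are all hypothetical placeholders; valid hyperparameters differ by base model.

```python
from typing import Any, Dict, List


def build_private_finetune_request(
    job_name: str,
    base_model_id: str,
    training_s3_uri: str,
    output_s3_uri: str,
    role_arn: str,
    kms_key_arn: str,
    subnet_ids: List[str],
    security_group_ids: List[str],
) -> Dict[str, Any]:
    """Build keyword arguments for a fine-tuning job confined to a VPC (hypothetical helper)."""
    if not subnet_ids or not security_group_ids:
        raise ValueError("a private job needs at least one subnet and security group")
    return {
        "jobName": job_name,
        "customModelName": f"{job_name}-model",
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        "customizationType": "FINE_TUNING",
        "trainingDataConfig": {"s3Uri": training_s3_uri},
        "outputDataConfig": {"s3Uri": output_s3_uri},
        # Hyperparameter names and ranges are model-specific; values are strings.
        "hyperParameters": {"epochCount": "2", "batchSize": "1", "learningRate": "0.00001"},
        # Training-data access runs through the customer's own subnets.
        "vpcConfig": {"subnetIds": subnet_ids, "securityGroupIds": security_group_ids},
        # The tuned model is encrypted with a customer-managed KMS key.
        "customModelKmsKeyId": kms_key_arn,
    }
```

The dictionary would then be submitted with `boto3.client("bedrock").create_model_customization_job(**request)`. Building it in a separate function makes the privacy settings easy to review and unit-test before any job runs.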

This assurance of data privacy is often the deciding factor in enterprise adoption. It allows CIOs to greenlight generative AI projects with confidence that their corporate IP will not leak into the public domain. The ability to maintain strict access controls, encryption standards, and compliance with regulations such as HIPAA or GDPR within the AI workflow is a major differentiator for high-stakes business environments.
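Access control is one concrete piece of this. The sketch below generates a least-privilege IAM policy that lets an application invoke only an approved list of Bedrock foundation models; because IAM denies anything not explicitly allowed, every other model stays off-limits. The region and model ID are example values, and the function name is hypothetical.

```python
import json
from typing import Any, Dict, List


def bedrock_invoke_policy(region: str, model_ids: List[str]) -> Dict[str, Any]:
    """Allow invoking only the listed foundation models (hypothetical helper)."""
    # Foundation-model ARNs have an empty account-ID field.
    resources = [f"arn:aws:bedrock:{region}::foundation-model/{m}" for m in model_ids]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModelsOnly",
                "Effect": "Allow",
                # InvokeModel also governs Converse; the streaming action covers streamed responses.
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                "Resource": resources,
            }
        ],
    }


policy = bedrock_invoke_policy("us-east-1", ["anthropic.claude-3-haiku-20240307-v1:0"])
print(json.dumps(policy, indent=2))
```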

MLOps: The Industrialization of Machine Learning

Moving beyond the hype of generative AI requires addressing the practical challenges of deployment. Many organizations find that building a model is only twenty percent of the battle; the remaining eighty percent involves integrating it into existing applications, monitoring its performance, managing versions, and retraining it as data drifts.
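Monitoring for data drift is a good example of that unglamorous eighty percent. As an illustration of the technique (a generic statistic, not a SageMaker API), the sketch below computes the Population Stability Index (PSI), which compares a feature's production distribution with its training distribution. The bin edges and the 0.2 retraining threshold are common rules of thumb, used here as assumptions.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values per bin; `edges` are ascending interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        # bisect_right counts how many edges are <= v, which is the bin index.
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (a - e) * ln(a / e); eps avoids log(0) for empty bins."""
    total = 0.0
    for e, a in zip(bin_fractions(expected, edges), bin_fractions(actual, edges)):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def needs_retraining(expected: Sequence[float], actual: Sequence[float],
                     edges: Sequence[float], threshold: float = 0.2) -> bool:
    """Flag the model for retraining when drift exceeds the (assumed) threshold."""
    return psi(expected, actual, edges) > threshold
```

Run on a schedule against each important input feature, a check like this turns "retraining as data drifts" from a judgment call into an alert.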

This discipline is known as Machine Learning Operations, or MLOps. Amazon SageMaker has evolved into a comprehensive platform designed to handle this entire lifecycle. SageMaker is not just for data scientists building experimental models in notebooks; it is an industrial-grade suite of tools for deploying models to production endpoints, managing feature stores, and automating CI/CD pipelines specifically for machine learning.

For enterprises, scalable MLOps is the difference between a successful pilot project and a production system that drives revenue. SageMaker provides the governance rails needed to ensure that models deployed in production are reliable, explainable, and auditable. This industrialization of AI processes is crucial for moving beyond ad-hoc implementations toward systemic organizational intelligence.
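The governance rails can be pictured as an automated gate in the deployment pipeline. This plain-Python sketch (not the SageMaker SDK) approves a candidate model only if every required metric meets its floor, and it records each decision in an audit log. The metric names, thresholds, and class names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class Candidate:
    name: str
    version: int
    metrics: Dict[str, float]


@dataclass
class PromotionGate:
    min_metrics: Dict[str, float]  # required floor per metric, e.g. {"accuracy": 0.90}
    audit_log: List[dict] = field(default_factory=list)

    def evaluate(self, candidate: Candidate) -> bool:
        """Approve only if every required metric is present and meets its floor."""
        failures = []
        for metric, floor in self.min_metrics.items():
            value = candidate.metrics.get(metric)
            if value is None or value < floor:
                failures.append(f"{metric}={value} below required {floor}")
        approved = not failures
        # Every decision is recorded, approved or not, so it can be audited later.
        self.audit_log.append({
            "model": candidate.name,
            "version": candidate.version,
            "approved": approved,
            "failures": failures,
            "timestamp": datetime.now(timezone.utc).isoformat(),
        })
        return approved
```

Treating a missing metric as a failure is a deliberate choice: a model that was never evaluated on a required measure should not reach production by default.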

Real-World Applications Driving High-Value Adoptions

The shift towards AWS as an AI platform is being driven by concrete use cases that offer significant return on investment across various sectors.

Revolutionizing Customer Experience

Enterprises are utilizing AWS generative AI services to build next-generation conversational agents. Unlike rigid, rules-based chatbots of the past, these new agents can understand intent, maintain context over long conversations, and interact with backend systems to perform tasks. This leads to higher call deflection rates in contact centers and improved customer satisfaction scores.
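Interacting with backend systems usually means tool use: the model asks the application to run a function, the application returns the result, and the model composes the reply. The sketch below shows that loop with the Bedrock Converse API's `toolConfig`, `toolUse`, and `toolResult` message shapes, assuming `client` is a boto3 `bedrock-runtime` client and the chosen model supports tool use. The order-lookup tool and its data are hypothetical stand-ins for a real backend.

```python
from typing import Any, Callable, Dict

ORDERS = {"A-1001": "shipped"}  # HYPOTHETICAL stand-in for an order database


def get_order_status(order_id: str) -> Dict[str, str]:
    return {"order_id": order_id, "status": ORDERS.get(order_id, "not found")}


TOOLS: Dict[str, Callable[..., Dict[str, str]]] = {"get_order_status": get_order_status}

TOOL_CONFIG = {"tools": [{"toolSpec": {
    "name": "get_order_status",
    "description": "Look up the shipping status of an order.",
    "inputSchema": {"json": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    }},
}}]}


def run_agent(client: Any, model_id: str, user_text: str, max_turns: int = 5) -> str:
    """Alternate between model turns and tool calls until the model answers."""
    messages = [{"role": "user", "content": [{"text": user_text}]}]
    for _ in range(max_turns):
        response = client.converse(modelId=model_id, messages=messages, toolConfig=TOOL_CONFIG)
        message = response["output"]["message"]
        messages.append(message)
        if response["stopReason"] != "tool_use":
            # Final answer: join the text blocks the model returned.
            return "".join(block.get("text", "") for block in message["content"])
        results = []
        for block in message["content"]:
            if "toolUse" in block:
                use = block["toolUse"]
                output = TOOLS[use["name"]](**use["input"])  # call the backend
                results.append({"toolResult": {"toolUseId": use["toolUseId"],
                                               "content": [{"json": output}]}})
        # Tool results go back to the model as a user turn.
        messages.append({"role": "user", "content": results})
    raise RuntimeError("agent did not finish within max_turns")
```

Capping the loop with `max_turns` keeps a confused model from calling tools indefinitely.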

Accelerating Software Development

Developer productivity is another major arena. Tools such as Amazon Q Developer (formerly Amazon CodeWhisperer) are being built into integrated development environments (IDEs) to provide real-time code suggestions, generate unit tests, and assist with legacy code modernization. For large enterprises with thousands of developers, even a small percentage increase in coding efficiency translates into substantial cost savings and faster time-to-market for new features.

Enhancing Knowledge Management

Large organizations are plagued by information silos. Employees can spend hours each day searching through internal wikis, SharePoint sites, and documents to find crucial information. AWS generative AI services are being used to create semantic search interfaces that let employees ask questions in natural language and receive synthesized answers drawn from across the organization's entire knowledge base, a pattern commonly called retrieval-augmented generation (RAG).
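The retrieval half of such a system can be sketched in a few lines. Here a bag-of-words vector stands in for a learned embedding model so that the example runs offline; a production deployment would use a real embedding model and a vector index. The documents and function names are hypothetical, and the prompt that `build_prompt` returns would be sent to a foundation model to produce the synthesized answer.

```python
import math
import re
from collections import Counter
from typing import List

DOCS = {  # HYPOTHETICAL internal knowledge-base entries
    "hr-leave": "Employees accrue annual leave monthly and request it in the HR portal.",
    "it-vpn": "Connect to the corporate VPN before accessing internal wikis.",
    "fin-expenses": "Submit travel expenses within 30 days with receipts attached.",
}


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 1) -> List[str]:
    """Return the IDs of the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(DOCS, key=lambda doc_id: cosine(q, embed(DOCS[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, k: int = 1) -> str:
    """Ground the model's answer in the retrieved passages."""
    context = "\n".join(f"[{d}] {DOCS[d]}" for d in retrieve(question, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"
```

Restricting the model to retrieved context, and tagging each passage with its source ID, is what lets the answer be traced back to the underlying documents.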

The Competitive Landscape and Future Outlook

While Microsoft Azure has made significant waves through its tight partnership with OpenAI, and Google Cloud continues to leverage its deep research roots in AI, AWS occupies a unique position.

AWS's strength lies in its massive existing customer base. It is generally easier for an enterprise already running 80 percent of its workloads on EC2 and S3 to adopt Bedrock or SageMaker than to migrate data to a competing cloud solely for AI capabilities. Data gravity plays a significant role here: bringing the AI compute to where the data already resides is often the most pragmatic and cost-effective strategy.

Furthermore, the AWS partner ecosystem is vast. Thousands of systems integrators and independent software vendors are building solutions on top of the AWS AI stack. This network effect accelerates adoption as partners bring industry-specific AI solutions to market, further embedding AWS into the fabric of enterprise operations.

Looking ahead, we can expect continued aggressive investment from AWS in all layers of the AI stack. This will likely include further iterations of its custom silicon to drive down inference costs, the addition of more specialized models to Bedrock, and high-level abstraction services that make it easier for business analysts, not just data scientists, to use AI tools.

The Inevitable Transformation

The transition of Amazon Web Services from an infrastructure utility to an intelligence platform is not just a strategic choice; it is an inevitability dictated by the market. The cloud wars of the last decade were fought over storage and basic compute. The cloud wars of the next decade will be fought over model performance, inference costs, and the ease with which businesses can turn generative AI into a competitive advantage.

By focusing on a flexible, secure, and cost-optimized approach to enterprise AI, AWS is positioning itself to define this new era. While their dominance in IaaS built the foundation of the modern internet, history will likely record that their most significant contribution was democratizing access to the powerful AI tools that reshaped the global economy. For the enterprise CTO balancing innovation with risk, the AWS roadmap offers a pragmatic path toward an intelligent future.

Source Reference:

The foundational concepts regarding AWS’s strategic shift toward AI success discussed in this article refer to themes explored in news analysis such as: https://www.artificialintelligence-news.com/news/awss-legacy-will-be-in-ai-success/